Complication, Complexity, and Chaos part 3: The "AI Sweet Spot"

May 20, 2023•1,117 words

Now that we've established a basic understanding of some systems concepts, we can use them to draw useful conclusions about AI.

Let's start by establishing a coherent and somewhat meaningful definition of "AI": statistical and computational techniques that allow humans to "train" and "generate" algorithms to work in problem spaces where we cannot directly write useful algorithms, and the vast, inscrutable algorithms which are generated by these techniques. Though the specifics may vary, every AI model is at its core a way to process quantified data and return a quantified output.

This is in contrast to, say, "formal algorithms", which are programs directly written in code by humans, and debugged through the manual readjustment of said code. Formal algorithms have been around for decades, and they've brought a great deal of value to a great many people. Though there are problem spaces where they do not do well, there are plenty where they do, and they benefit from the significant advantage that a qualified human can understand what they're doing in great detail, and make changes as needed.

Make no mistake, our ability to develop "AI" is significant in its own right, as it lets us put algorithms to work on problems that we previously could not write formal algorithms to solve. There are plenty of these problems! For example, literal translation of texts from one language to another, image recognition, and fraud detection at scale are all problems that formal algorithms have struggled with for decades, and at which AI techniques are already doing much better.

However, some sense appears to have gotten lost in the recent flood of hype. The first (hopefully uncontroversial[1]) statement we can make is that every "AI" tool is, in fact, an algorithm. This means it is constrained by all the algorithmic limits we discussed in previous posts: it will do best in complicated or complex environments where the problem space is well-defined and the parameters are quantifiable. In chaotic environments, and/or against ill-defined problems or unquantifiable parameters, it will fail in ways both dramatic and mundane. On the other hand, in problem spaces where formal algorithms operate effectively, an informal algorithm will be a more expensive and less reliable way to solve the problem. For example, imagine trying to train a large language model to do arithmetic accurately. You probably could do this reasonably well, given enough hardware and effort, but the best possible result would be an expensive, overengineered calculator that is still less reliable than any of the thousands of calculator programs which already exist.
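To make the arithmetic example a little more concrete, here is a toy sketch contrasting the two approaches. It is not a language model: the "trained" adder is a hypothetical three-parameter linear model fitted to examples of addition with plain stochastic gradient descent, and the names `formal_add` and `train_adder` are made up for illustration. Even in this most favorable case, where the model's form can represent addition exactly, it takes thousands of parameter updates to approximate what a single `+` does exactly, and its answers are only ever an approximation whose quality depends on training choices.

```python
import random
from typing import Callable


def formal_add(a: float, b: float) -> float:
    # A "formal algorithm": written directly by a human, exact and inspectable.
    return a + b


def train_adder(steps: int = 5000, lr: float = 0.01, seed: int = 0) -> Callable[[float, float], float]:
    # A toy "trained algorithm" (hypothetical): a linear model
    # y = w1*a + w2*b + c, fitted to examples of addition by SGD.
    rng = random.Random(seed)
    w1, w2, c = rng.random(), rng.random(), rng.random()  # random starting weights
    for _ in range(steps):
        a, b = rng.uniform(-1, 1), rng.uniform(-1, 1)      # one training example
        err = (w1 * a + w2 * b + c) - (a + b)              # prediction minus target
        # Gradient step on the squared error 0.5 * err**2 for each parameter.
        w1 -= lr * err * a
        w2 -= lr * err * b
        c -= lr * err
    # The learned "algorithm" is just these fitted numbers.
    return lambda a, b: w1 * a + w2 * b + c


learned = train_adder()
print(formal_add(2, 3), learned(2, 3))
```

The formal version is one instruction and is exact by construction; the learned version needed a training loop, a learning rate, a step count, and a random seed, and still only lands near the right answer.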

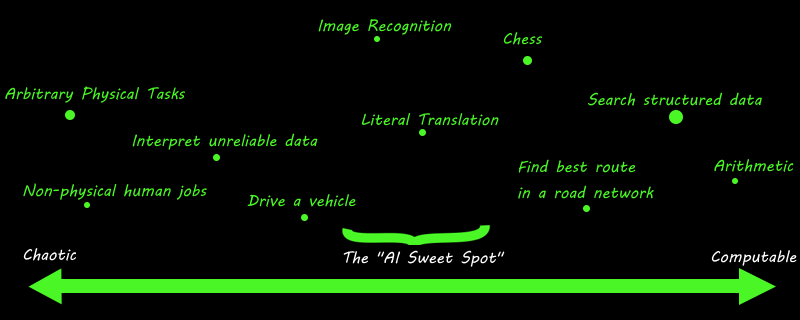

So what this means is that, in the space of all possible problems, the space where AI can be actually beneficial is rather narrow. We can rank interesting problems more or less on a spectrum, based on how well-defined they are and how quantifiable the parameters are, as a visual aid:

This isn't exactly a scientifically rigorous schematic[2]; many of the placements on this chart are somewhat subjective. But hopefully it conveys the main point, which is that AI only adds value in certain circumstances. If you go too far to the right, where the problems are easy to define and quantify, any AI model will lose to formal algorithms in efficiency and accuracy. Meanwhile, if you go too far to the left, where the problems exist in a chaotic space, the inherent limitations of algorithms will rapidly become apparent and you'll see all manner of boneheaded failures.

Unfortunately for many investors and corporations, this concept is not widely known, or paid much attention, in tech and tech-adjacent circles. Recent successes in the AI field, specifically the development of new informal algorithms that can tackle problems where formal algorithms struggle, have fueled rampant speculation that algorithms will be able to solve all manner of problems that, by their very nature, they cannot. At the more "reasonable" end of the spectrum[3], this has manifested in wildly inflated projections of the revenue to be gained from developing AI technologies, while at the far end, various deranged techno-cultists are speculating that the long-prophesied Era of Machines is imminent and that algorithms running on electronic hardware will render organic life obsolete.

With a realistic perspective, we can see that all of this speculation rests on faulty premises. It is likely that the recent flood of investment into AI[4] is chasing a level of returns that will never materialize. Worse, it's a risky industry to be in: multiple global megacorps are launching their own AI divisions[5] at the same time as a horde of startups is flooding into the space, backed by the battered but still formidable remnants of the Silicon Valley venture capital scene. Various parasitic species of hustle bros, marketing gurus, and would-be regulators have also caught the scent of potential future wealth and are trying to position themselves to extract as much of the profits as they can.

So on the business side, anyone trying to build a fortune in AI will face a lot of obstacles. If you pick a problem that's too far outside the sweet spot, you'll end up either being out-competed on efficiency and reliability by formal algorithms written by old-school software companies[6], or trying to undercut human labor on price and scale with a product that is strictly worse. And no matter which strategy you pick, you'll be competing against well-organized and well-capitalized opposition who will try to crowd you out of the handful of profitable opportunities that do show up.

In conclusion, if you are trying to do something clever in or with AI, you should probably find something better to work on instead. The world suffers from no shortage of problems; maybe pick one and try to solve it in a more old-fashioned way. It will probably be less glamorous for a year or three, but once the whole AI thing falls apart like so many utopian tech fantasies before, you'll have a golden opportunity to be real smug about something.

---

1. Actually quite controversial in certain circles.
2. Or an "artistically good" one.
3. Very literally.
4. And anything which happens to have those two letters somewhere in its name.
5. At least in part due to a crippling lack of other opportunities for investment and expansion.
6. At least some of whom are actually very good at what they do.